MASSIVE M3 on Rocky Linux 2024

As part of the security uplift, all of M3’s login, data transfer and compute nodes are being upgraded to Rocky Linux. The migration will be carried out progressively during June 2024. A pair of new front-facing (login) nodes is now available for accessing M3, and we will also update the Strudel Desktop page so that you can launch desktops on the Rocky Linux desktop nodes with A40 GPUs. The remaining compute nodes will be rebuilt with Rocky Linux in three phases.

The most noticeable consequence of this change is that software modules built for the previous OS (CentOS 7) may not work on Rocky Linux. You may need to update your job scripts so that a Rocky Linux-compatible module (where one exists) is loaded instead of the older one. The table below lists which software modules are compatible with Rocky Linux; for those that are not, we have either installed a newer version built for Rocky Linux or installed the software as part of the operating system itself.

Important

Existing CentOS nodes will be converted to Rocky Linux soon. Please take the necessary steps to future-proof your job scripts.
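While both CentOS and Rocky Linux nodes are in service, one way to future-proof a job script is to choose the module according to the OS of the node the job actually lands on. The sketch below relies only on the standard /etc/os-release file, which reports ID="centos" on CentOS 7 and ID="rocky" on Rocky Linux. The function names and the module names (mysoftware/1.0, mysoftware/2.0) are hypothetical placeholders; substitute the versions that module avail shows on each OS.

```bash
#!/bin/bash
# Sketch: pick a module according to the node's operating system.
# Function names and module names are HYPOTHETICAL placeholders, not real M3 modules.

# Print the ID field of an os-release file (default: /etc/os-release),
# e.g. "rocky" on Rocky Linux or "centos" on CentOS 7.
os_id() {
    local release_file="${1:-/etc/os-release}"
    # Source the file in a subshell so NAME, VERSION, etc. do not leak out.
    ( . "$release_file" && printf '%s\n' "$ID" )
}

# Map an OS ID to the module that should be loaded on that OS.
# Returns non-zero for an unrecognised OS so the caller can stop early.
module_for_os() {
    case "$1" in
        rocky)  echo "mysoftware/2.0" ;;   # hypothetical Rocky Linux build
        centos) echo "mysoftware/1.0" ;;   # hypothetical legacy CentOS build
        *)      return 1 ;;
    esac
}
```

In the body of a job script you could then write `mod=$(module_for_os "$(os_id)") && module load "$mod"`, so the same script loads a suitable module on either OS until the migration is complete.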

What is the plan?

During June 2024, M3 will be progressively upgraded to Rocky Linux according to the following plan:

Week 2 - June 10-14 2024: announcing the new M3 login node running Rocky Linux and updates to the Strudel2 Desktop;

Week 3 - June 17-21 2024: 50% of the desktop nodes and 30% of the compute nodes will be upgraded;

Week 4 - June 24-28 2024: another batch of nodes will be upgraded, achieving 70% conversion; and

Early July 2024: the remaining nodes will be upgraded.

What has not changed

Your username and password are the same as on the existing MASSIVE M3.

Your $HOME folder and your existing project and scratch folders remain accessible.

The same SLURM job scheduler is shared by both the CentOS and Rocky Linux nodes.

Important Considerations with Strudel2

We recommend starting with the A40 desktops, which are already available and running Rocky Linux.

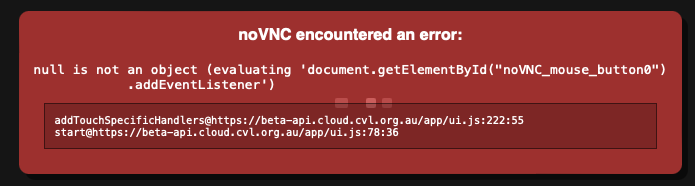

If you see the red-box error shown above when opening your desktop, simply do a hard reload of the page (reload while holding Shift) so that the NoVNC button appears.

Avizo currently does not run on the A40 nodes; however, other GPU nodes will be converted to Rocky Linux over the next two weeks, and these will provide Avizo capability.

How do I access the Rocky Linux front-facing nodes?

During the initial launch, the Rocky Linux login node for MASSIVE M3 is:

m3-login3.massive.org.au

More front-facing nodes are in preparation.

How do I submit batch jobs to Rocky Linux nodes?

The easiest way to submit Rocky Linux jobs is to run sbatch from the m3-login3 login node. Jobs submitted from this login node are automatically directed to the Rocky Linux nodes.

If you are submitting jobs to the existing CentOS nodes, there is no need to change your job scripts; simply submit them from either m3-login1 or m3-login2.

Rocky Linux capacity will increase as we convert existing nodes.
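As a sketch of this workflow, the job script below loads a module from the Rocky Linux listing and prints the OS name, so you can confirm where it ran. The file name myjob.sh, the job name and the resource values are placeholders; fftw/3.3.10 is taken from the /apps/modulefiles listing and stands in for whatever your job actually needs.

```bash
#!/bin/bash
# myjob.sh: minimal test job (job name and resource values are placeholders)
#SBATCH --job-name=rocky-test
#SBATCH --ntasks=1
#SBATCH --time=00:10:00

# Start from a clean environment, then load a module built for Rocky Linux
module purge
module load fftw/3.3.10

# Print the OS name so you can confirm the job ran on a Rocky Linux node
grep PRETTY_NAME /etc/os-release
```

To submit it, log in to the Rocky Linux login node with `ssh your_username@m3-login3.massive.org.au` (replace your_username with your M3 username) and run `sbatch myjob.sh` there.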

What modules are available?

When you run the module avail command, you will see three sections of installed software modules. The first section lists modules installed under /apps/modulefiles:

```text
---------------------------------------- /apps/modulefiles ------------------------------------------
3dslicer/5.6.2      fftw/3.3.10       hpcx/2.14-redhat9.2  molden/6.9        raven/1.8.3
anaconda/2024.02-1  freesurfer/7.4.1  hpcx/latest          motioncor2/1.6.4  relion/5.0-20240320
ansys/20r2          fsl/6.0.7.10      htslib/1.19.1        motioncor3/1.0.1  rstudio/2023.12.1
```

The second section shows additional modules installed using Spack.

```text
--------------------- /apps/spack/modulefiles ----------------------
hpl/2.3-gcc-11.3.1-npardb4    hpl/2.3-npardb4
```

Lastly, the third section lists the existing CentOS software modules. These modules are considered legacy and may not work on Rocky Linux. The table below shows which of them work (marked Compatible). Software that is not compatible has either been rebuilt specifically for Rocky Linux or installed as part of the operating system.
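To check whether a particular package has a Rocky Linux build before changing a job script, you can narrow the listing to a single name and then load an exact version. The example uses FFTW from the first section; run it on m3-login3 so that you see the Rocky Linux module set.

```bash
# List every module visible on this node
module avail

# Show only modules matching "fftw"
module avail fftw

# Load an exact version from the /apps/modulefiles section
module load fftw/3.3.10
```

Naming the full version (fftw/3.3.10 rather than just fftw) keeps the job script from silently picking up a different default if new versions are installed later.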

Avizo/Amira on Rocky

Avizo is not supported on Rocky Linux 9, but with the help of its developers we have managed to get it working on the P4 nodes only. Our Strudel menus cannot be updated until all nodes have been upgraded. To access Avizo, please open a Rocky9 P4 Strudel session.

Then open a terminal and type:

```bash
module load avizo
vglrun Avizo3D
```

Avizo does not work on Ampere GPUs. We are working on this issue, but do not yet have a date for when a fix will be ready.

How do I request software to be installed on Rocky Linux?

Please submit a software installation request via the following Google Form: https://docs.google.com/forms/d/e/1FAIpQLScSuz80Bof2QyGUNo-6aWsUgjTcQ5ZN6H91g5R1QEkyxULhZA/viewform

Very long JupyterLab queue times

Currently, JupyterLab on STRUDEL may still default to CentOS 7 nodes. Because very few CentOS nodes remain (as of 15/Jul/2024), this can lead to very long queue times. To fix this, toggle on “Advanced settings” and add --constraint r9 after sbatch (with a space on either side) so that you request a Rocky Linux node instead.

Note that you must also add this constraint when you use srun or salloc from a login node to connect to a compute node.
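For reference, the same constraint can be passed on the command line or set in a job script; --constraint (short form -C) is a standard Slurm option of sbatch, srun and salloc. The script name myjob.sh and the task and time values below are placeholders.

```bash
# Batch job: request a node with the r9 (Rocky Linux) feature
sbatch --constraint r9 myjob.sh

# Equivalent directive inside a job script:
#   #SBATCH --constraint=r9

# Interactive allocation from a login node
salloc --constraint r9 --ntasks=1 --time=01:00:00

# Interactive shell on a Rocky Linux compute node
srun --constraint r9 --ntasks=1 --time=01:00:00 --pty bash
```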

sbatch: error: Batch job submission failed: Requested node configuration is not available

Double-check that you are not requesting more CPUs, GPUs, or memory than a single node in your chosen partition provides. If your request does fit, see Very long JupyterLab queue times above: you may be accidentally requesting a CentOS 7 node that no longer exists.
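To see what a single node in a partition actually provides, sinfo can print one line per node with its CPU count, memory (in MB), generic resources such as GPUs, and node features. your_partition is a placeholder for the partition you are submitting to, and the grep assumes r9 is the feature name used in the --constraint advice above.

```bash
# One line per node: name, CPUs, memory (MB), GRES (e.g. GPUs), features
sinfo -p your_partition -N -o "%N %c %m %G %f"

# Keep only lines mentioning r9 (expected to be the Rocky Linux feature)
sinfo -p your_partition -N -o "%N %c %m %G %f" | grep r9
```

If the second command prints nothing, the partition currently has no Rocky Linux nodes, and a request with --constraint r9 cannot be satisfied there.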