My HOME directory is full!

If your HOME directory (e.g. /home/username) is full, many software tools will break. To resolve this:

1. Connect to a login node. See “How to connect to M3 if I can’t use a STRUDEL desktop?” below.
2. Verify that you are over quota. See “The user_info command” below.
3. Identify exactly which files/directories are using up your quota. See “The ncdu command” below.
4. Remove or move large files. See “Common causes of your disk filling up” below for advice.

Important

Your HOME quota will not be increased, so please follow the instructions below to clean up your home directory.

How to connect to M3 if I can’t use a STRUDEL desktop?

You will need to connect to an M3 login node to clean up your home directory. There are two main methods to connect:

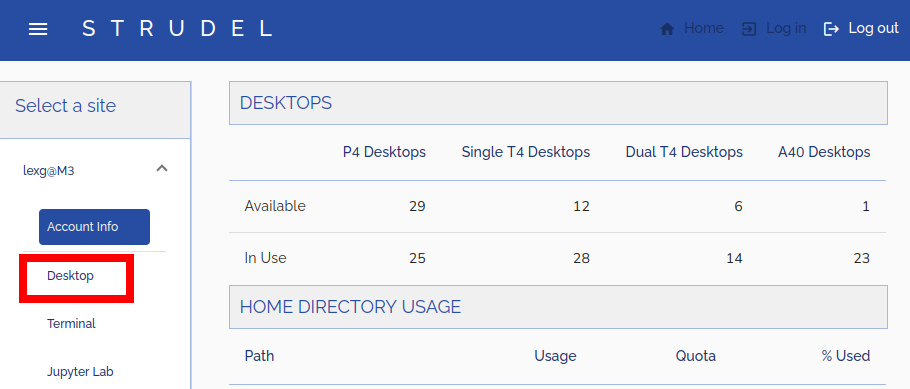

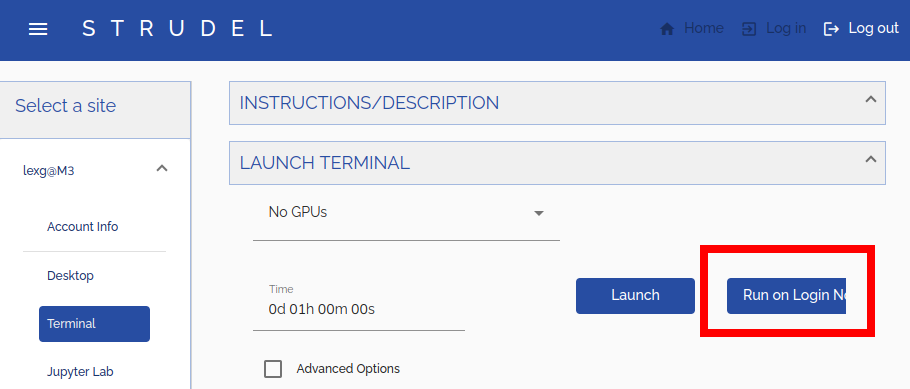

Connecting via STRUDEL: In STRUDEL, select the “Terminal” tab, then click “Run on login node” (see the screenshots below).

Connecting to M3 via ssh: connect via SSH from your own computer’s command line.

The user_info command

user_info is a custom script on M3 that summarises your disk quota usage for your home, project, and scratch directories. To use it, simply run user_info in a terminal on M3.

[lexg@m3-login3 ~]$ user_info

Desktops
+-----------+---------------+----------------------+--------------------+----------------+
|           | P4 Desktops   | Single T4 Desktops   | Dual T4 Desktops   | A40 Desktops   |
|-----------+---------------+----------------------+--------------------+----------------|
| Available | 32            | 15                   | 7                  | 2              |
| In Use    | 22            | 25                   | 12                 | 22             |
+-----------+---------------+----------------------+--------------------+----------------+

Home directory usage
+------------+---------+---------+----------+
| Path       | Usage   | Quota   | % Used   |
|------------+---------+---------+----------|
| /home/lexg | 3 GB    | 10 GB   | 28 %     |
+------------+---------+---------+----------+

Project Usage
+--------+------------+------------+--------+-----------+-----------+--------+
| Name   | Projects   | Projects   | %      | Scratch   | Scratch   | %      |
|        | Usage      | Quota      | Used   | Usage     | Quota     | Used   |
|--------+------------+------------+--------+-----------+-----------+--------|
| nq46   | 1149 GB    | 2560 GB    | 45 %   | 3556 GB   | 5120 GB   | 69 %   |
| pMOSP  | 41217 GB   | 102400 GB  | 40 %   | 39895 GB  | 158720 GB | 25 %   |
+--------+------------+------------+--------+-----------+-----------+--------+

Project File Counts
+--------+------------+------------+--------+-----------+-----------+--------+
| Name   | Projects   | Projects   | %      | Scratch   | Scratch   | %      |
|        | Usage      | Quota      | Used   | Usage     | Quota     | Used   |
|--------+------------+------------+--------+-----------+-----------+--------|
| nq46   | 4601094    |            | N/A    | 7170594   |           | N/A    |
| pMOSP  | 6101832    |            | N/A    | 3231871   |           | N/A    |
+--------+------------+------------+--------+-----------+-----------+--------+

user_info shows you your storage quotas and how much space you are using for:

- your home directory;
- your /projects/ directories;
- your /scratch/ directories.

If you are having issues with STRUDEL desktops in particular, check whether your home directory is over quota.

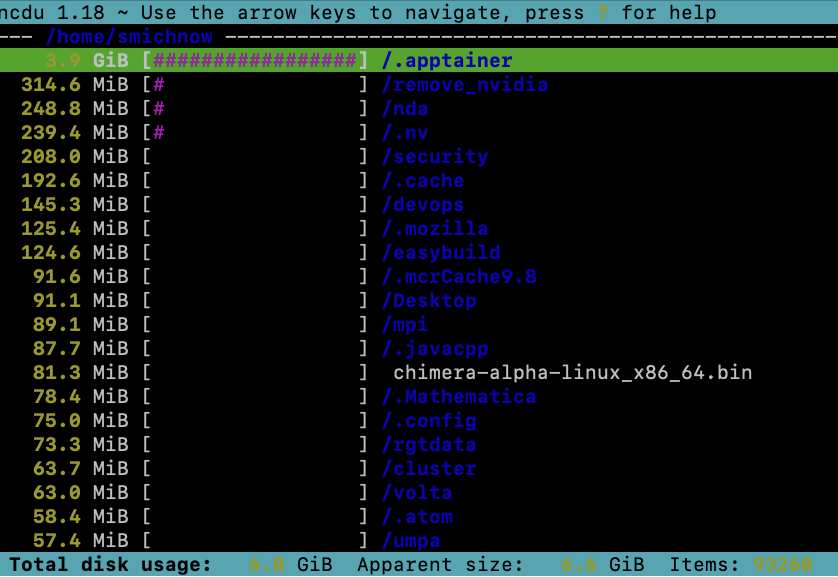

The ncdu command

To identify which files/directories are taking up the most space, we recommend using the ncdu command. To use it, connect to M3 with a terminal and enter ncdu. It scans every file under your current directory and, once the scan finishes, opens an interactive list sorted so that the largest file/directory is at the top (see screenshot below). Use the arrow keys to navigate the list, press Enter to go inside a directory, and press ? for more help.

You can also specify a path when using ncdu if you want to analyse somewhere other than your current working directory.

ncdu /projects/n46
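If you would rather have a plain, non-interactive summary (for example, to paste into a support request), du and sort can give a rough equivalent of ncdu’s top-level view. This is only a sketch, not an M3-provided tool: largest_entries is a hypothetical helper name, and it assumes GNU sort (for -h) and a find that supports -mindepth/-maxdepth, as on Rocky Linux.

```shell
# largest_entries [DIR] [N]: print the N largest entries (files or directories,
# hidden ones included) directly under DIR, biggest first.
# Defaults: DIR=$HOME, N=10. Hypothetical helper, not an M3 tool.
largest_entries() {
    local dir="${1:-$HOME}"
    local n="${2:-10}"
    # -mindepth 1 -maxdepth 1: only the immediate children of DIR (dotfiles too).
    # du -sh: one human-readable total per child; sort -rh: largest first (GNU sort).
    # If DIR is empty, find runs no du at all, so nothing is printed.
    find "$dir" -mindepth 1 -maxdepth 1 -exec du -sh -- {} + 2>/dev/null \
        | sort -rh | head -n "$n"
}
```

For example, largest_entries ~ 5 lists the five largest entries directly under your home directory, including hidden ones such as ~/.conda and ~/.cache.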

Once you have identified which files/directories are taking up the most space, move on to Common causes of your disk filling up.

Common causes of your disk filling up

Large log files: ~/.vnc/

Sometimes large log files can appear in your folders. STRUDEL uses VNC, which sometimes writes large log files into the ~/.vnc directory. You can delete these log files if they are large.

An example:

rm ~/.vnc/m3p007:35.log

Unfortunately there is no good solution for preventing this from occurring, but it’s rare enough that it’s not a huge issue.
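Rather than guessing log file names, you can list the large ones first and then decide what to delete. This is a minimal sketch: big_vnc_logs is a hypothetical helper, the 100M default threshold is an arbitrary assumption (pick whatever you consider “large”), and the M/G size suffixes require GNU find.

```shell
# big_vnc_logs [DIR] [SIZE]: list *.log files directly inside DIR (default ~/.vnc)
# that are larger than SIZE (default 100M, in GNU find's -size units).
# Hypothetical helper; the 100M default is an arbitrary choice.
big_vnc_logs() {
    local dir="${1:-$HOME/.vnc}"
    local size="${2:-100M}"
    # -size +100M matches files strictly larger than 100 MiB (find rounds sizes up
    # to whole units before comparing).
    find "$dir" -maxdepth 1 -type f -name '*.log' -size "+$size" -print
}

# Review the list first, then delete those files, e.g.:
#   big_vnc_logs | while IFS= read -r f; do rm -- "$f"; done
```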

Conda environments and packages: ~/.conda/

If you have not configured Conda, it will by default store all of its packages and environments in your home directory. With enough usage, this is bound to fill up your home quota.

Important

Please do not put virtual environment folders in your HOME directory.

To resolve this, first configure Conda as described in Conda on M3 (Rocky 9). You then have a few options.

If you just want to delete everything, run the commands below.

rm -rf ~/.conda/envs

rm -rf ~/.conda/pkgs

If you instead want to save your existing environments, you can try the hack below that uses symlinks to work around your home quota. This may let you skip the configuration steps in our Conda guide.

PROJECT_ID=ab12 # Change this to your own project ID

mkdir -p /scratch/$PROJECT_ID/$USER # Make a personal scratch directory (but not the conda folder itself)

mv ~/.conda /scratch/$PROJECT_ID/$USER/conda # Move conda files into scratch, renaming .conda to conda

ln -s /scratch/$PROJECT_ID/$USER/conda ~/.conda # Set up a soft link from ~/.conda to the new folder

ls -lad ~/.conda # Verify .conda now points to new folder
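The same steps can be wrapped in a function with a few safety checks, which also works for other large directories you want to move out of home. This is a sketch under stated assumptions: move_and_link is a hypothetical name, DEST should be an absolute path on scratch or project storage, and the function deliberately refuses to run if DEST already exists, because mv would then move the source inside DEST instead of renaming it.

```shell
# move_and_link SRC DEST: move directory SRC to DEST and leave a symlink at SRC.
# Hypothetical helper. DEST should be an absolute path that does not exist yet.
move_and_link() {
    local src="$1" dest="$2"
    if [ -L "$src" ]; then
        echo "move_and_link: $src is already a symlink" >&2; return 1
    fi
    if [ ! -e "$src" ]; then
        echo "move_and_link: $src does not exist" >&2; return 1
    fi
    if [ -e "$dest" ]; then
        # An existing DEST directory would make mv nest SRC inside it.
        echo "move_and_link: $dest already exists" >&2; return 1
    fi
    # Create only the parent of DEST, so mv performs a rename into place.
    mkdir -p -- "$(dirname -- "$dest")" || return 1
    mv -- "$src" "$dest" || return 1
    # The old path now points at the new location.
    ln -s -- "$dest" "$src"
}
```

For example: move_and_link ~/.conda "/scratch/$PROJECT_ID/$USER/conda", followed by ls -lad ~/.conda to check the link.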

Other ways of transferring your Conda environments from home to scratch are described in Transferring a conda environment to a different location. If you follow one of these methods, remember to run the rm -rf commands above afterwards to clean up home.

Cache folders: ~/.cache/

The software you use (e.g. Anaconda or Apptainer) may create temporary cache folders in ~/.cache/ (and sometimes in other folders in home).

If you are not currently running the corresponding program, it is generally safe to just delete its cache. For example, if ~/.cache/pip is taking up lots of space, you can run:

rm -rf ~/.cache/pip

However, this will not prevent these folders from filling up again. Often, you can set an environment variable in your ~/.bashrc to tell the software to cache files elsewhere. The sub-sections below show how to do this for some common software. All of them require you to edit your ~/.bashrc using your preferred text editor.

General advice: XDG_CACHE_HOME

Some software obeys the XDG_CACHE_HOME environment variable. Open ~/.bashrc in a text editor and add:

PROJECT_ID=ab12 # Change this to your own project ID

export XDG_CACHE_HOME="/scratch/$PROJECT_ID/$USER/cache"

mkdir -p "$XDG_CACHE_HOME" # in case this cache directory doesn't already exist
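Programs that follow the XDG Base Directory specification use $XDG_CACHE_HOME when it is set and non-empty, and otherwise fall back to $HOME/.cache; each program then writes into its own subdirectory (pip, for instance, uses pip). The hypothetical helper below only mimics that lookup, so you can predict where a compliant program will write; individual programs may deviate from the specification.

```shell
# xdg_cache_dir APP: print the cache directory an XDG-compliant program named APP
# would use: $XDG_CACHE_HOME/APP if XDG_CACHE_HOME is set and non-empty,
# otherwise $HOME/.cache/APP. Hypothetical helper for illustration only.
xdg_cache_dir() {
    # ${VAR:-default} treats an empty value the same as an unset one.
    printf '%s\n' "${XDG_CACHE_HOME:-$HOME/.cache}/$1"
}
```

For example, after adding the lines above to ~/.bashrc and opening a new shell, xdg_cache_dir pip should print a path under /scratch/$PROJECT_ID/$USER/cache rather than ~/.cache.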

Apptainer/Singularity

Apptainer (formerly Singularity) caches files in ~/.apptainer/cache/ by default. To change this, set the APPTAINER_CACHEDIR environment variable, e.g. by adding the following to your ~/.bashrc:

PROJECT_ID=ab12 # Change this to your own project ID

export APPTAINER_CACHEDIR="/scratch/$PROJECT_ID/$USER/apptainer-cache"

mkdir -p "$APPTAINER_CACHEDIR" # in case this cache directory doesn't already exist

Pip

Python’s pip installer caches files in ~/.cache/pip/ by default. Pip obeys XDG_CACHE_HOME. Alternatively, you can set the PIP_CACHE_DIR environment variable in your ~/.bashrc:

PROJECT_ID=ab12 # Change this to your own project ID

export PIP_CACHE_DIR="/scratch/$PROJECT_ID/$USER/pip-cache"

mkdir -p "$PIP_CACHE_DIR" # in case this cache directory doesn't already exist
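After editing ~/.bashrc, open a new terminal (or run source ~/.bashrc) and confirm the variables are actually set. The function below is a hypothetical convenience helper, not part of any of these tools; it prints XDG_CACHE_HOME, APPTAINER_CACHEDIR, PIP_CACHE_DIR and TMPDIR, showing <unset> for any that are empty or unset.

```shell
# show_cache_vars: print the cache/temp-directory variables discussed on this page.
# Hypothetical helper; prints <unset> when a variable is unset or empty.
show_cache_vars() {
    printf 'XDG_CACHE_HOME=%s\n' "${XDG_CACHE_HOME:-<unset>}"
    printf 'APPTAINER_CACHEDIR=%s\n' "${APPTAINER_CACHEDIR:-<unset>}"
    printf 'PIP_CACHE_DIR=%s\n' "${PIP_CACHE_DIR:-<unset>}"
    printf 'TMPDIR=%s\n' "${TMPDIR:-<unset>}"
}
```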

Large data files

By design, HOME folders have small quotas. Please put your large data files in either your scratch or project folders. Soft links can be used if your software requires the files to be in your HOME directory.
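To find individual large files that are candidates for moving, a find-based sketch like the one below may help. It assumes GNU find (for -printf and the G/M size suffixes), as on M3’s Rocky Linux; big_files and its 1G default threshold are illustrative choices, not an M3 tool.

```shell
# big_files [DIR] [SIZE]: list regular files under DIR (default $HOME) larger
# than SIZE (default 1G), largest first, as "<bytes><TAB><path>".
# Hypothetical helper; requires GNU find for -printf.
big_files() {
    local dir="${1:-$HOME}"
    local size="${2:-1G}"
    # -xdev: do not descend into other filesystems mounted below DIR.
    # %s is the file size in bytes, %p the path; sort -rn puts the biggest first.
    find "$dir" -xdev -type f -size "+$size" -printf '%s\t%p\n' 2>/dev/null \
        | sort -rn
}
```

Once you have a list, files can be moved to scratch or project storage with mv, leaving a soft link behind (ln -s) only if your software insists on the old path.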

Other disk quota issues

Sometimes when you run a program, it may say something like:

OSError: [Errno 28] No space left on device

But when you check your storage quotas using The user_info command, you seem to be well under quota for all of your directories!

In this scenario, it is usually because you are running the program on a login node, the program was writing to /tmp/, and /tmp/ filled up.

On a login node, /tmp/ is quite small (and is shared by everyone):

[lexg@m3-login3 ~]$ df -h /tmp

Filesystem             Size  Used Avail Use% Mounted on
/dev/mapper/vg00-root   46G   16G   28G  37% /

Instead, you should be running this program in a SLURM job. Inside a job, /tmp/ should be bound to a much larger local disk (> 1 TB):

[lexg@m3s101 ~]$ df -h /tmp

Filesystem      Size  Used Avail Use% Mounted on
/dev/nvme0n1p1  2.9T  7.1M  2.8T   1% /tmp

Note

/tmp/ is still shared by every user on a compute node, but it very rarely fills up. A user’s files in /tmp/ are automatically deleted once their job terminates.
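If you want a script to stop early instead of hitting “No space left on device” halfway through, it can check free space in /tmp/ before it starts writing. This is a minimal POSIX sh sketch; avail_kib, check_tmp_space and the 10 GiB default are hypothetical choices, not M3 tools. It uses df -P so that long device names (such as /dev/mapper/vg00-root) do not wrap onto a second line.

```shell
# avail_kib [PATH]: print free space, in KiB, on the filesystem holding PATH
# (default /tmp). -P forces one line per filesystem; column 4 is "Available".
avail_kib() {
    df -Pk -- "${1:-/tmp}" | awk 'NR == 2 { print $4 }'
}

# check_tmp_space [PATH] [MIN_GIB]: return 1 (with a warning on stderr) if fewer
# than MIN_GIB GiB (default 10) are free at PATH (default /tmp); 2 if df fails.
check_tmp_space() {
    local path="${1:-/tmp}"
    local min_gib="${2:-10}"
    local avail
    avail=$(avail_kib "$path")
    [ -n "$avail" ] || return 2
    if [ "$avail" -lt $((min_gib * 1024 * 1024)) ]; then
        echo "Warning: only $((avail / 1024 / 1024)) GiB free in $path" >&2
        return 1
    fi
    return 0
}
```

In a job script, for example, check_tmp_space /tmp 50 || exit 1 would abort before the main program runs if less than 50 GiB were free.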

If /tmp/ somehow still fills up even inside a SLURM job, you can try setting the TMPDIR environment variable to a scratch directory in your job script (or in your shell, if using an interactive session). For example:

PROJECT_ID=ab12 # Change this to your own project ID

export TMPDIR="/scratch/$PROJECT_ID/$USER/tmp"

mkdir -p "$TMPDIR" # in case this directory doesn't already exist
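Many tools choose their temporary directory from TMPDIR and fall back to /tmp when it is unset; GNU mktemp, for example, does this when called without a template. The sketch below (show_tmp_location is a hypothetical helper) relies on that behaviour to confirm where temporary files will land after you export TMPDIR. Not every program honours TMPDIR, so treat this as a check of your shell setup rather than a guarantee for any particular application.

```shell
# show_tmp_location: report the directory in which mktemp creates temporary files
# right now (TMPDIR if set, otherwise /tmp), removing the probe file afterwards.
# Hypothetical helper for illustration.
show_tmp_location() {
    local f
    f=$(mktemp) || return 1
    echo "Temporary files go to: $(dirname -- "$f")"
    rm -f -- "$f"
}
```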