CUDA in Python environments#

Using Conda for your Python environments#

We highly recommend using conda to manage your Python environments. This guide presumes you have already configured conda on M3 according to Conda on M3 (Rocky 9).

PyTorch and CUDA#

PyTorch bundles its own CUDA libraries, provided you follow the official PyTorch instructions for installing a CUDA build with pip or conda. To create a conda environment with PyTorch + CUDA 11.8 installed:

$ module load miniforge3/24.3.0-0

(base) $ mamba create -y --name=my-pytorch-env pytorch torchvision torchaudio pytorch-cuda=11.8 -c pytorch -c nvidia

Note

To install previous versions of PyTorch, see https://pytorch.org/get-started/previous-versions/.

Verifying PyTorch can use CUDA#

Start a job with a single GPU, e.g. with smux new-session --partition=gpu --gres=gpu:1, and do:

$ module load miniforge3/24.3.0-0

(base) $ mamba activate my-pytorch-env

(my-pytorch-env) $ python -c 'import torch; a = torch.Tensor([42]).to("cuda"); print(a)'

You should see the output tensor([42.], device='cuda:0'). If you don’t see this, see Troubleshooting.
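If the one-liner above fails, a slightly more detailed check can help narrow down why. The following is a minimal sketch, not an official M3 tool: the function name pytorch_cuda_report is hypothetical, and it only uses standard PyTorch attributes (torch.version.cuda, torch.cuda.is_available, torch.cuda.get_device_name). It returns early if PyTorch is not installed in the active environment.

```python
import importlib.util


def pytorch_cuda_report():
    """Collect basic facts about PyTorch's view of CUDA (hypothetical helper)."""
    report = {"torch_installed": False, "cuda_available": False}
    if importlib.util.find_spec("torch") is None:
        return report  # PyTorch is not installed in the active environment

    import torch

    report["torch_installed"] = True
    report["torch_version"] = torch.__version__
    # torch.version.cuda is None for CPU-only builds of PyTorch
    report["built_with_cuda"] = torch.version.cuda
    report["cuda_available"] = torch.cuda.is_available()
    if report["cuda_available"]:
        report["device_name"] = torch.cuda.get_device_name(0)
    return report


if __name__ == "__main__":
    for key, value in pytorch_cuda_report().items():
        print(f"{key}: {value}")
```

If built_with_cuda is None, you likely have a CPU-only build. If it shows a version but cuda_available is False, check that your job actually has a GPU allocated.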

TensorFlow and CUDA#

TensorFlow >= 2.15#

For TensorFlow (TF) versions v2.15 and above, you can install a CUDA-enabled TF with:

$ module load miniforge3/24.3.0-0

(base) $ mamba create -y --name=my-tensorflow-env python=3.11

(base) $ mamba activate my-tensorflow-env

(my-tensorflow-env) $ pip install 'tensorflow[and-cuda]'

Note

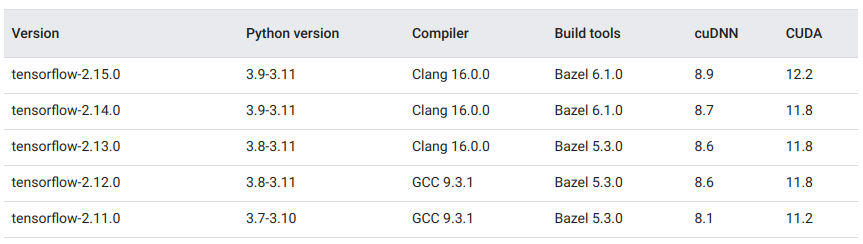

For this approach, you must start by installing a suitable Python version for your desired TF version. From https://www.tensorflow.org/install/source#gpu, we see that Python versions 3.9-3.11 are valid for TF >= 2.15.
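As a rough illustration, the Python version constraint can be checked from inside the new environment before running pip. This is a sketch only: the 3.9-3.11 bounds are copied from the note above and may differ for your TF release, and the helper name python_ok_for_tf is hypothetical.

```python
import sys

# Bounds taken from the note above (assumption: adjust for your TF release)
TF_MIN_PYTHON = (3, 9)
TF_MAX_PYTHON = (3, 11)


def python_ok_for_tf(version=None):
    """Return True if (major, minor) lies within the assumed supported range."""
    if version is None:
        version = sys.version_info[:2]
    return TF_MIN_PYTHON <= tuple(version[:2]) <= TF_MAX_PYTHON


if __name__ == "__main__":
    current = ".".join(str(part) for part in sys.version_info[:2])
    print(f"Python {current} in assumed range: {python_ok_for_tf()}")
```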

TensorFlow < 2.15#

For TF versions < 2.15, you should install a combination of the tensorflow-gpu and cudatoolkit (includes CUDA and cuDNN) conda packages.

$ export CONDA_OVERRIDE_CUDA="11.2"

$ module load miniforge3/24.3.0-0

(base) $ mamba create -y --name=my-tensorflow-env tensorflow-gpu=2.8 cudatoolkit=11.2

Note

The command $ export CONDA_OVERRIDE_CUDA="11.2" lets you install a GPU build of TF on a non-GPU node (e.g. a login node). Ensure this version matches that of cudatoolkit in your mamba create command. To determine which version of cudatoolkit to install for a given version of tensorflow-gpu, see the table of tested configurations at https://www.tensorflow.org/install/source#gpu.

Verifying TF can use CUDA#

Start a job with a single GPU, e.g. with smux new-session --partition=gpu --gres=gpu:1, and do:

$ module load miniforge3/24.3.0-0

(base) $ mamba activate my-tensorflow-env

(my-tensorflow-env) $ python -c 'import tensorflow as tf; print(tf.config.list_physical_devices("GPU"))'

You should see the output [PhysicalDevice(name='/physical_device:GPU:0', device_type='GPU')]. If you don’t see this, see Troubleshooting.
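For a little more detail than the one-liner gives, something like the following sketch may be useful. The helper name tensorflow_gpu_report is hypothetical; it relies only on tf.test.is_built_with_cuda and tf.config.list_physical_devices, and returns early if TensorFlow is not installed in the active environment.

```python
import importlib.util


def tensorflow_gpu_report():
    """Collect basic facts about TensorFlow's view of GPUs (hypothetical helper)."""
    report = {"tf_installed": False, "gpus": []}
    if importlib.util.find_spec("tensorflow") is None:
        return report  # TensorFlow is not installed in the active environment

    import tensorflow as tf

    report["tf_installed"] = True
    report["tf_version"] = tf.__version__
    # False here indicates a CPU-only build of TensorFlow
    report["built_with_cuda"] = tf.test.is_built_with_cuda()
    report["gpus"] = [d.name for d in tf.config.list_physical_devices("GPU")]
    return report


if __name__ == "__main__":
    for key, value in tensorflow_gpu_report().items():
        print(f"{key}: {value}")
```

An empty gpus list combined with built_with_cuda being True suggests the build is fine but no GPU is visible to the job.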

Supported versions of CUDA#

The very latest versions of CUDA may not be supported on M3. The latest supported CUDA version depends on the NVIDIA drivers currently installed on a given node. To determine this latest supported version, connect to that node, run nvidia-smi, and look for CUDA Version: X.Y. As of 14/Nov, GPU nodes on M3 support up to CUDA 12.2.
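The nvidia-smi check described above can also be scripted. The sketch below assumes the header printed by nvidia-smi contains the text "CUDA Version: X.Y", as described above; the function names are hypothetical, and tuple comparison stands in for a proper version comparison.

```python
import re
import shutil
import subprocess


def parse_cuda_version(nvidia_smi_output):
    """Return (major, minor) from 'CUDA Version: X.Y', or None if absent."""
    match = re.search(r"CUDA Version:\s*(\d+)\.(\d+)", nvidia_smi_output)
    if match is None:
        return None
    return int(match.group(1)), int(match.group(2))


def driver_max_cuda_version():
    """Run nvidia-smi on the current node and parse its reported CUDA version."""
    if shutil.which("nvidia-smi") is None:
        return None  # probably not on a GPU node
    result = subprocess.run(
        ["nvidia-smi"], capture_output=True, text=True, check=False
    )
    return parse_cuda_version(result.stdout)


def is_supported(requested, driver_max):
    """True if the requested (major, minor) does not exceed the driver's maximum."""
    return requested <= driver_max


if __name__ == "__main__":
    print("Driver supports CUDA up to:", driver_max_cuda_version())
```

For example, parse_cuda_version("CUDA Version: 12.2") returns (12, 2), and is_supported((11, 8), (12, 2)) is True.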

Another restriction on valid CUDA versions is the compute capability (CC) of a GPU. Each model of NVIDIA GPU has a specific CC, and each version of CUDA supports only a certain range of CCs. See Wikipedia - CUDA for a compatibility matrix. However, you probably don’t need to think about this unless you are installing a very new or very old version of CUDA.
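If you do need to know the CC of the GPU in your job, one option is to ask PyTorch. This sketch assumes a CUDA-enabled PyTorch environment such as the one created earlier; the helper name gpu_compute_capability is hypothetical, while torch.cuda.get_device_capability is a standard PyTorch call that returns a (major, minor) tuple.

```python
import importlib.util


def gpu_compute_capability(device_index=0):
    """Return the GPU's compute capability as (major, minor), or None."""
    if importlib.util.find_spec("torch") is None:
        return None  # PyTorch is not installed in the active environment

    import torch

    if not torch.cuda.is_available():
        return None  # no usable GPU is visible to PyTorch
    return torch.cuda.get_device_capability(device_index)


if __name__ == "__main__":
    print("Compute capability:", gpu_compute_capability())
```

You can then look up the returned pair in the compatibility matrix mentioned above.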

Troubleshooting#

If PyTorch or TensorFlow does not appear to recognise a GPU, this could be because:

You are not on a GPU node OR did not ask for a GPU with --gres=gpu:1. If nvidia-smi returns “Command not found”, then you are not on a GPU node.

You have not activated your environment. Try which python and verify that it’s pointing to your virtual environment.

You installed a CPU-only build of PyTorch/TensorFlow.

The CUDA version being used is incompatible with your version of PyTorch/TensorFlow.

Something else has gone wrong. Look carefully at the output to see if any errors were logged.
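The first two checks in the list above can be gathered in one go with a short script. This is only a sketch under some assumptions: the helper name environment_diagnostics is hypothetical, CONDA_DEFAULT_ENV is the variable conda sets when an environment is activated, and CUDA_VISIBLE_DEVICES is commonly (but not necessarily) set by the scheduler when a GPU is allocated.

```python
import os
import shutil
import sys


def environment_diagnostics():
    """Gather basic facts about the node and the active Python environment."""
    return {
        # False suggests you are not on a GPU node
        "nvidia_smi_found": shutil.which("nvidia-smi") is not None,
        # Should point inside your conda environment, like `which python`
        "python_executable": sys.executable,
        # Name of the active conda environment, or None if none is active
        "conda_env": os.environ.get("CONDA_DEFAULT_ENV"),
        # Assumption: often set by the scheduler when a GPU is allocated
        "cuda_visible_devices": os.environ.get("CUDA_VISIBLE_DEVICES"),
    }


if __name__ == "__main__":
    for key, value in environment_diagnostics().items():
        print(f"{key}: {value}")
```

Including this output when you contact the help desk may speed up diagnosis.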

If you are still struggling, try searching online first. If that doesn’t help, please contact our help desk.